By SIRMA

How far can you take a single vocal performance? With smart AI voice cloning tools, the possibilities are nearly endless.

Recording rich vocal stacks often takes hours to get right. There’s a thin line between utter chaos and a majestic wall of sound. Multiple layers, perfectly aligned and tuned, must coexist harmoniously. It’s not an easy task to accomplish single-handedly.

Many vocal producers turn to traditional processors in times like these, such as vocoders, harmonizers, and formant shifters. But none capture the timbre and natural expression of a human voice quite like Dreamtonics’ Vocoflex. With it, you can transform your voice into a vast variety of virtual background singers. Here’s how.

What is AI Voice Cloning?

Much like human singers doing impressions of others’ vocal styles, AI voice cloning tools are designed to imitate. They don’t directly process recordings like typical audio effects do. Instead, they generate a new vocal performance that sounds like a specific human voice.

To do so, they analyze the linguistic and acoustic properties of the voice. How quickly does the singer breathe? What kind of accent do they have? Is their tone breathy or tense? How fast or slow is their vibrato? These are some of the questions AI modelers find answers to before generating a digital clone.

Often, lengthy samples from various recordings are needed to decode the makeup of a single voice. But with Vocoflex, importing just 10 to 20 seconds of audio is enough. Use the built-in Voice Generator, and you’ll hear the transformation instantly. Whether you’re working in the studio or performing live, you can morph your voice as many times as you want. No text-to-speech or MIDI programming required.

What Came Before AI Voice Cloning in Music

Decades ago, vocal synthesis was far more limited. Mainstream vocoders analyzed frequency bands and amplitude to produce voices that sounded like a blend of human and machine. They were all the rage when they first reached musicians in the 1970s.

As the years went on, software versions made vocoder synthesis even more accessible and popular. Taylor Swift opened her hit “Delicate” with vocoded vocals. Zedd, Maren Morris, and Grey fortified the chorus sections of their masterpiece, “The Middle”, with a vocoder. Today, the effect remains a staple of music production.

After vocoders, harmonizers emerged, offering more natural-sounding results. By copying and shifting the pitch of a vocal recording, harmonizers could generate chords in real time. They could also be MIDI-triggered, which made them a compelling alternative to vocoders.

Imogen Heap’s iconic a cappella track, “Hide and Seek”, put vocal harmonizers on the map, and soon, other artists followed. This type of processing required accuracy in pitch, a clean tone, and consistent dynamics to work well. Despite their limitations, however, harmonizers eventually became a superpower for indie artists. After all, they eliminated the need for backing singers, which simplified the logistics of touring.

Meanwhile, formant shifters introduced a new kind of vocal manipulation. Unlike pitch shifters, these tools could alter vowel shapes and the tonal quality of a human voice.

The concept drew inspiration from slowing down and speeding up magnetic tape. Slow the tape down, and you would hear the music playing back at a lower pitch with a darker tone. Speed it up, and you would hear the opposite effect.
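The tape relationship can be put into numbers. The sketch below is only an illustration of the arithmetic, not anything taken from a specific plugin: it converts a playback speed ratio into a pitch change in semitones, using the standard equal-tempered relationship of 12 semitones per doubling of frequency. The function name is hypothetical.

```python
import math


def tape_pitch_shift_semitones(speed_ratio: float) -> float:
    """Return the pitch change, in semitones, for a playback speed ratio.

    speed_ratio is playback speed divided by recording speed,
    so 0.5 means half speed and 2.0 means double speed.
    """
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    # One octave (12 semitones) corresponds to a doubling of frequency.
    return 12 * math.log2(speed_ratio)


half_speed = tape_pitch_shift_semitones(0.5)    # one octave down
double_speed = tape_pitch_shift_semitones(2.0)  # one octave up
print(half_speed, double_speed)
```

Because tape changes everything by the same ratio, the vocal resonances move along with the pitch, which is why the slowed-down voice sounds darker as well as lower.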

A similar type of speed-based pitch control has been available to DJs on turntables for decades. But vocal formant-shifting plugins that worked independently of tempo and pitch only began to pop up in the 2010s. Frank Ocean’s “Nikes” created a ripple effect in the industry and soon, more artists were formant-shifting their vocals to hop on the trend.

Eventually, producers discovered that adjusting the formants of background layers could also enhance the blend of vocal stacks. Mixing multiple takes from a single voice proved challenging. Excessive resonance and a lack of impact were common issues. But altering the formant of each double and harmony layer created the illusion of a choir. And who doesn’t want that?

Vocoflex: A Vocal Transformer Powered by AI

Today, formant shifting is still mainly associated with gender morphing. For example, lowering the formant of a female vocal using an effect like Soundtoys’ Little AlterBoy or Waves’ Vocal Bender may result in an androgynous tone. However, this method will not deliver a convincing male vocal conversion. For that, you need an AI-powered transformer like Vocoflex.

Like other vocal processors, Vocoflex works best with dry, clean vocal recordings. Effects like reverb, delay, or distortion can interfere with the modeling.

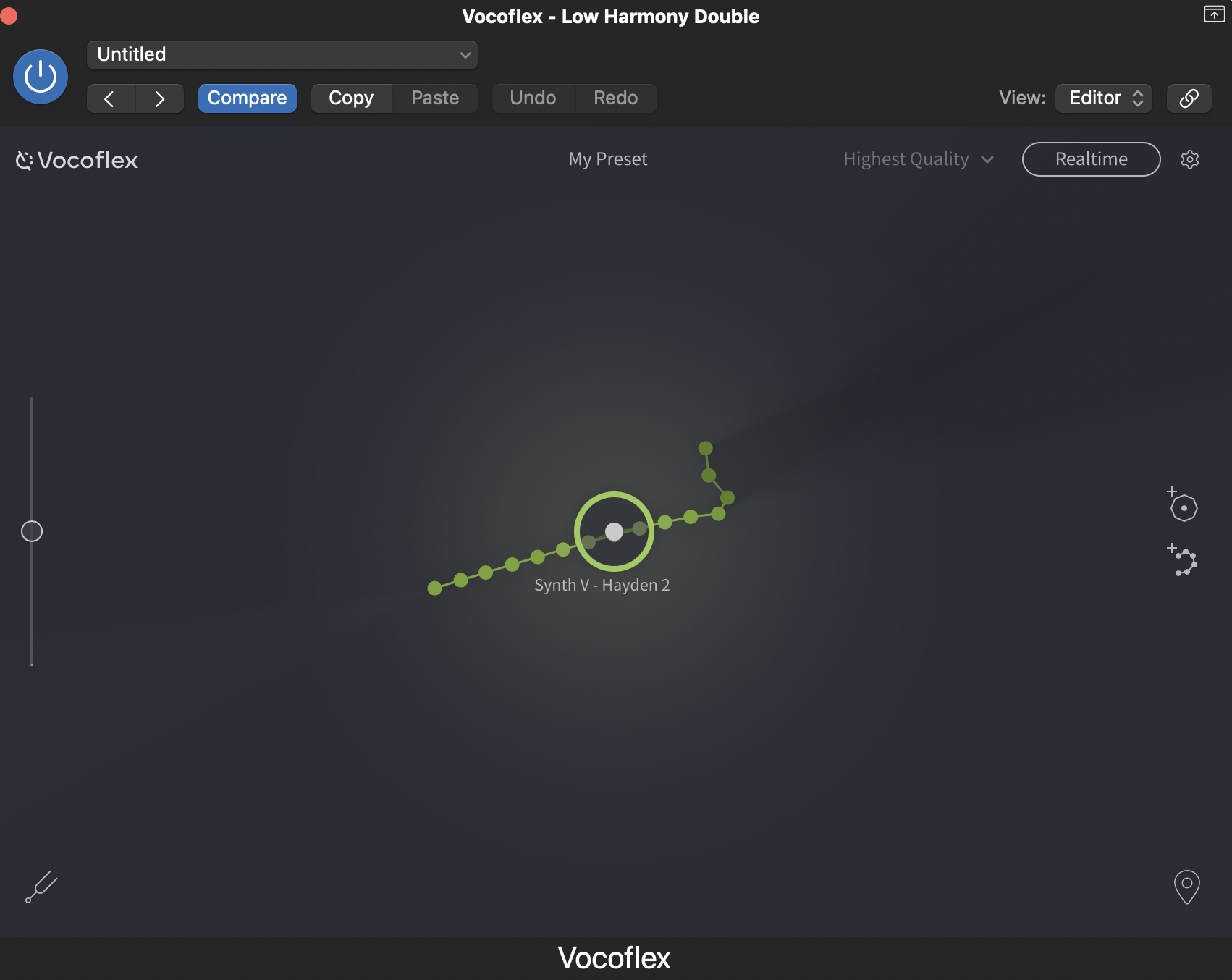

To begin, insert Vocoflex into your vocal chain and import one or more Target Voices. These can be excerpts of various human or AI voices you have permission to use. You can stick with a single target voice or blend multiple voices to achieve a more customized timbre.

The result? A new vocal that matches your pitch, rhythm, and phrasing — yet sounds like someone else. It’s like having a background singer who not only mirrors your delivery but also brings their own artistry to the mix.

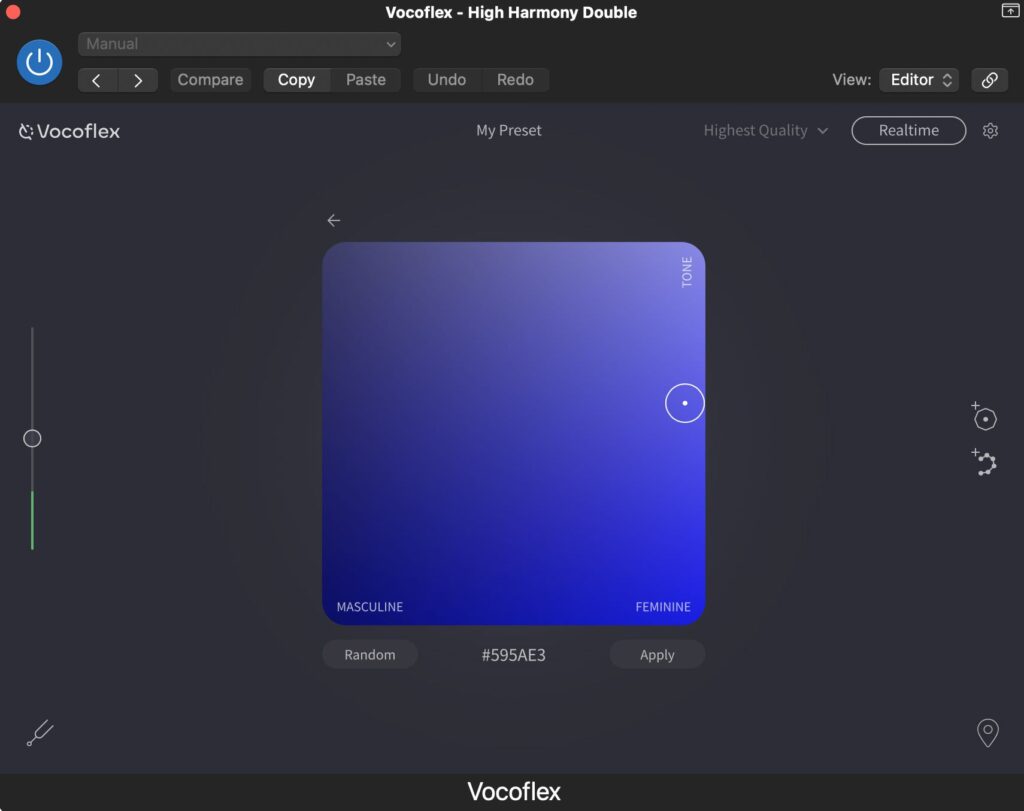

Even without a reference sample, you can fully transform your vocals using Vocoflex’s Voice Generator. To dial in the right vocal delivery for your production, move fluidly between the Masculine, Feminine, and Tone parameters. Want to explore a different sonic quality? The Random button can help uncover many distinctive vocal characters.

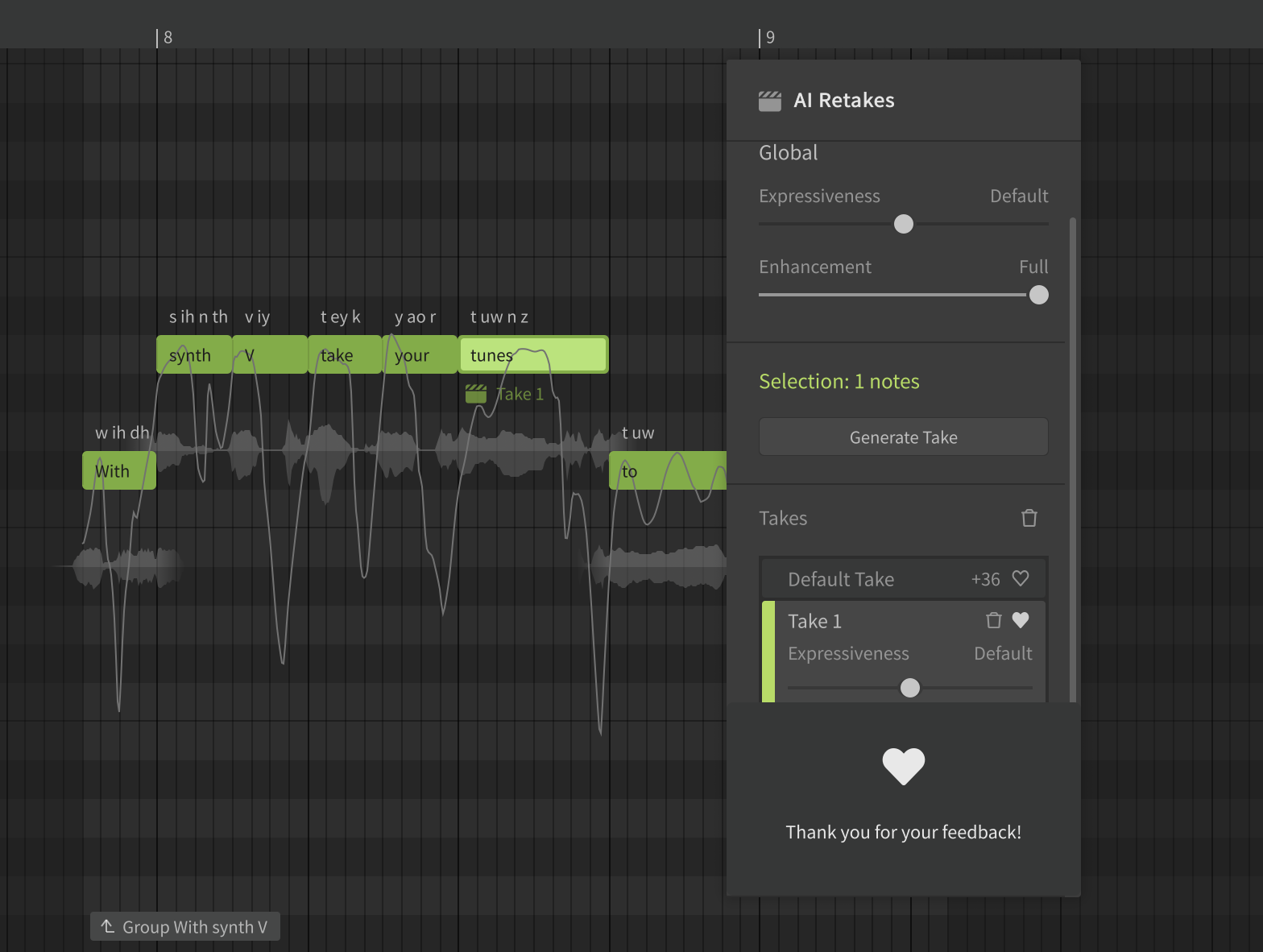

Unlike other audio effects on the market, Vocoflex can generate multiple versions of a vocal from the same performance, each drawing on its small details in a different way. Some versions may sound breathy, while others feel fuller and more resonant.

Want to hear an example? Here’s how Vocoflex’s Voice Generator compares to a standard formant-shifting plugin.

Building Rich Vocal Stacks with Vocoflex

Vocal stacks are unavoidable in mainstream genres. From pop to R&B, catchy hooks and layered voices are often a package deal. Done right, they can make any singer sound larger than life.

This is why artists tend to spend the biggest chunk of their time in the studio perfecting their double and harmony takes. Each layer must match the flow, timing, and energy of the lead vocal. Otherwise, backing vocals can do more harm than good in polished productions.

In an intimate folk setting, a single take of each vocal part may suffice. But for vocals to shine amidst busy synths and guitars, you need at least one double layer for each part. In some pop productions, the lead vocal can even be layered with five or more takes singing in unison.

But more vocals often mean more problems. When you’re on a deadline, the last thing you want is more time-consuming tasks on your plate. And in music production, audio editing is the most time-consuming of them all. Even convenient pitch correction and alignment tools like Melodyne, Auto-Tune, and Revoice Pro require manual labor to tighten a vocal stack.

With Vocoflex, you can cut that time in half — or less.

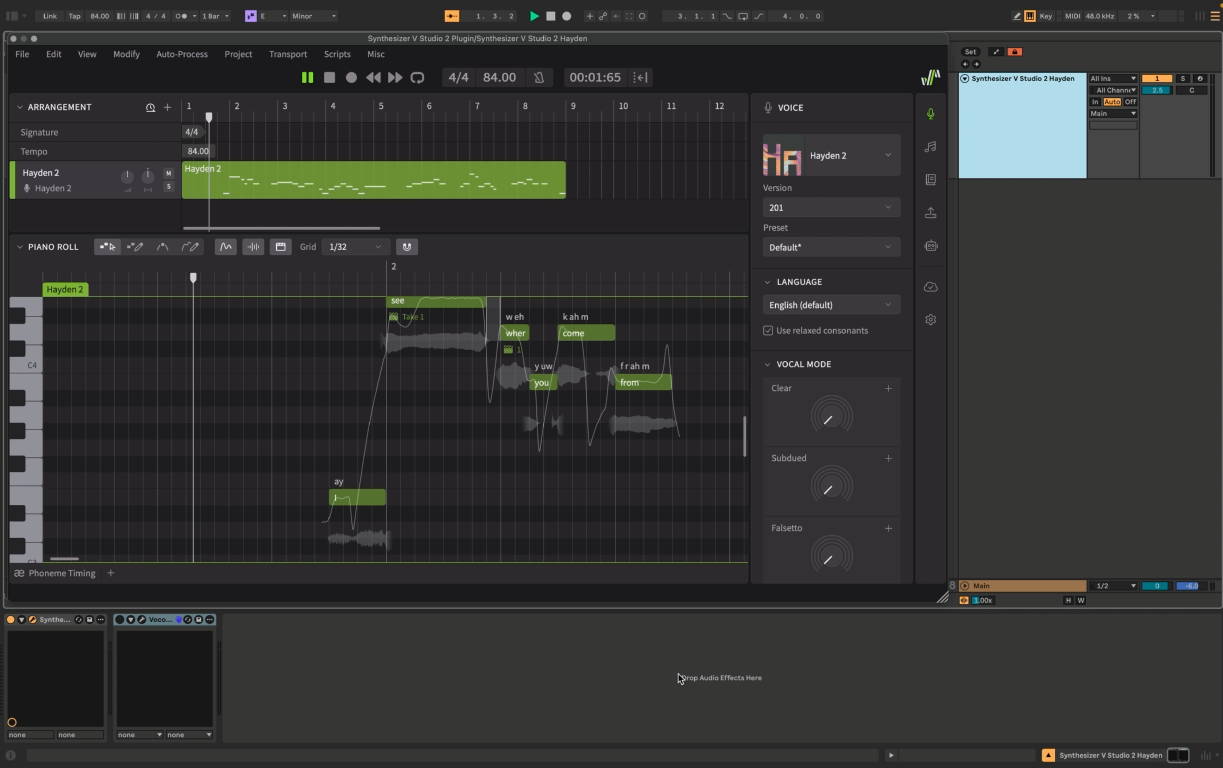

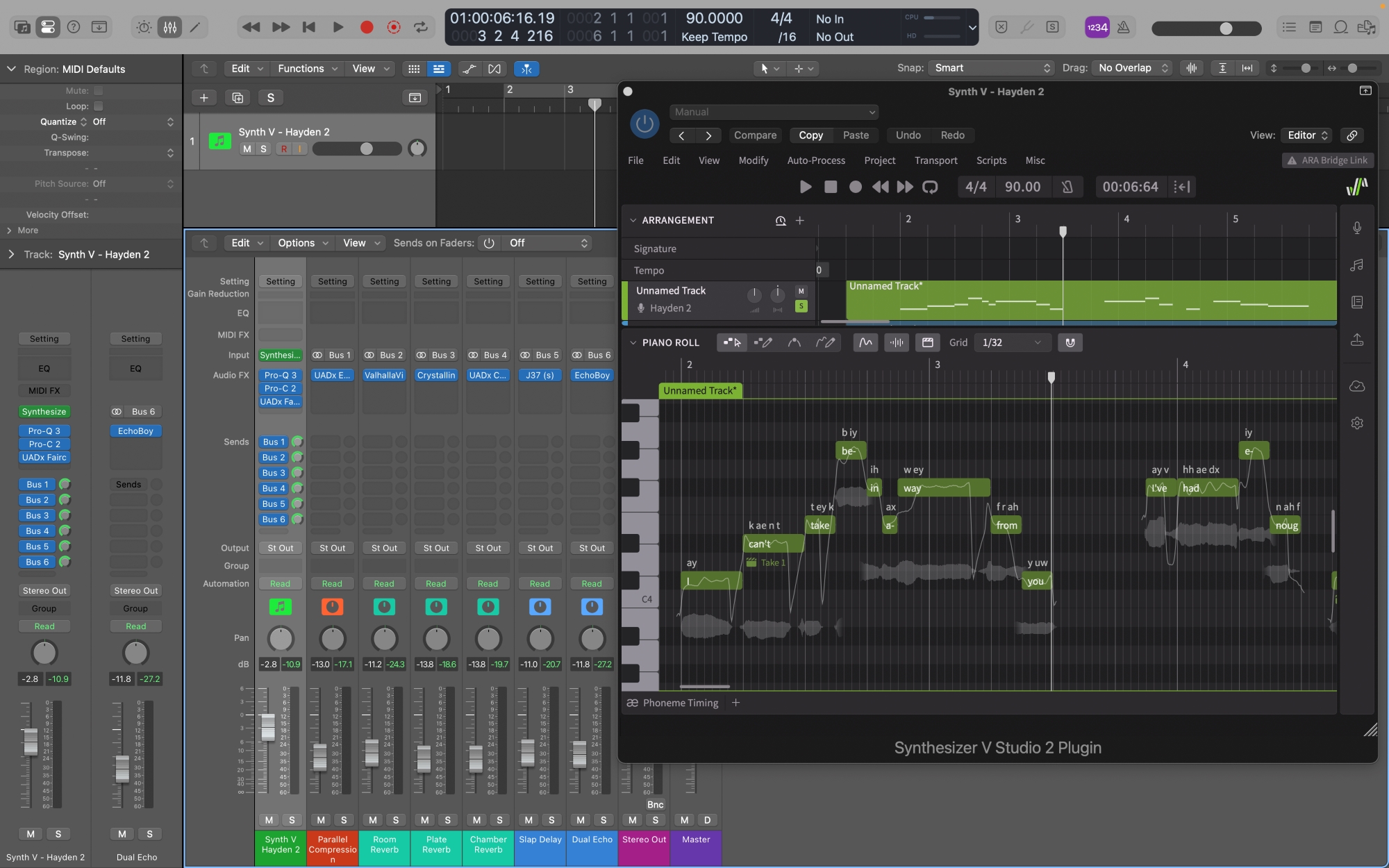

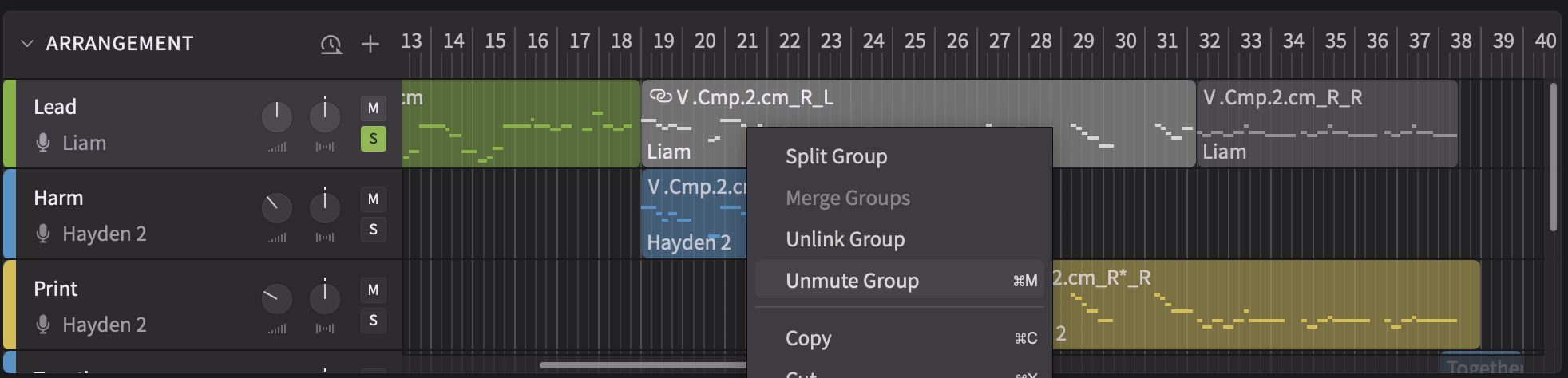

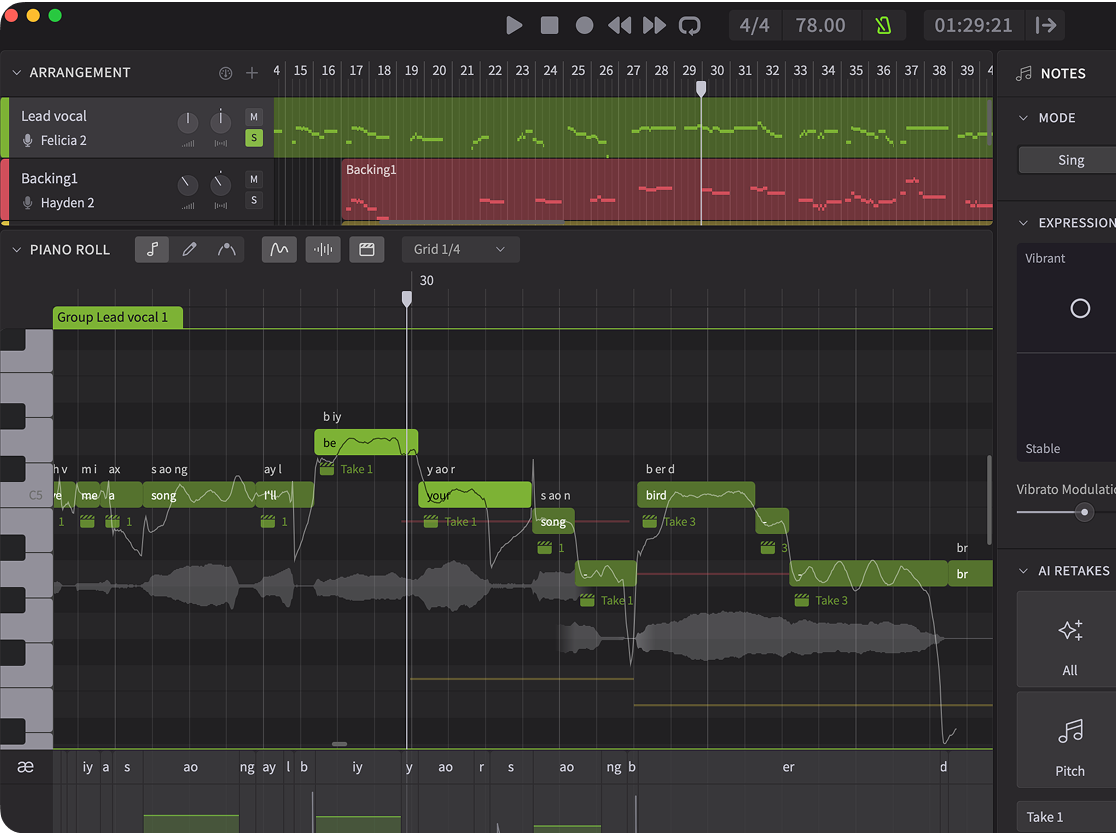

To put it to the test, I recorded three separate vocal performances: the lead vocal and two harmony parts, one high and one low. On their own, they sounded good, but not as powerful as I would have liked.

First, I copied and pasted the lead vocal on two separate channels to generate two unique double layers. For these layers, I opted to create new timbres from scratch with the built-in Voice Generator. Establishing feminine and slightly breathy characters in the double layers added an airy quality to the mix.

Then, I copied and pasted the lead vocal on another channel. This time, I used Vocoflex to generate a layer an octave below the original register of my performance. I pitch-shifted the vocal by -12 semitones in Vocoflex and picked a masculine tone within the Voice Generator.

Next, I cloned each harmony vocal and panned them left and right for a wider stereo image.

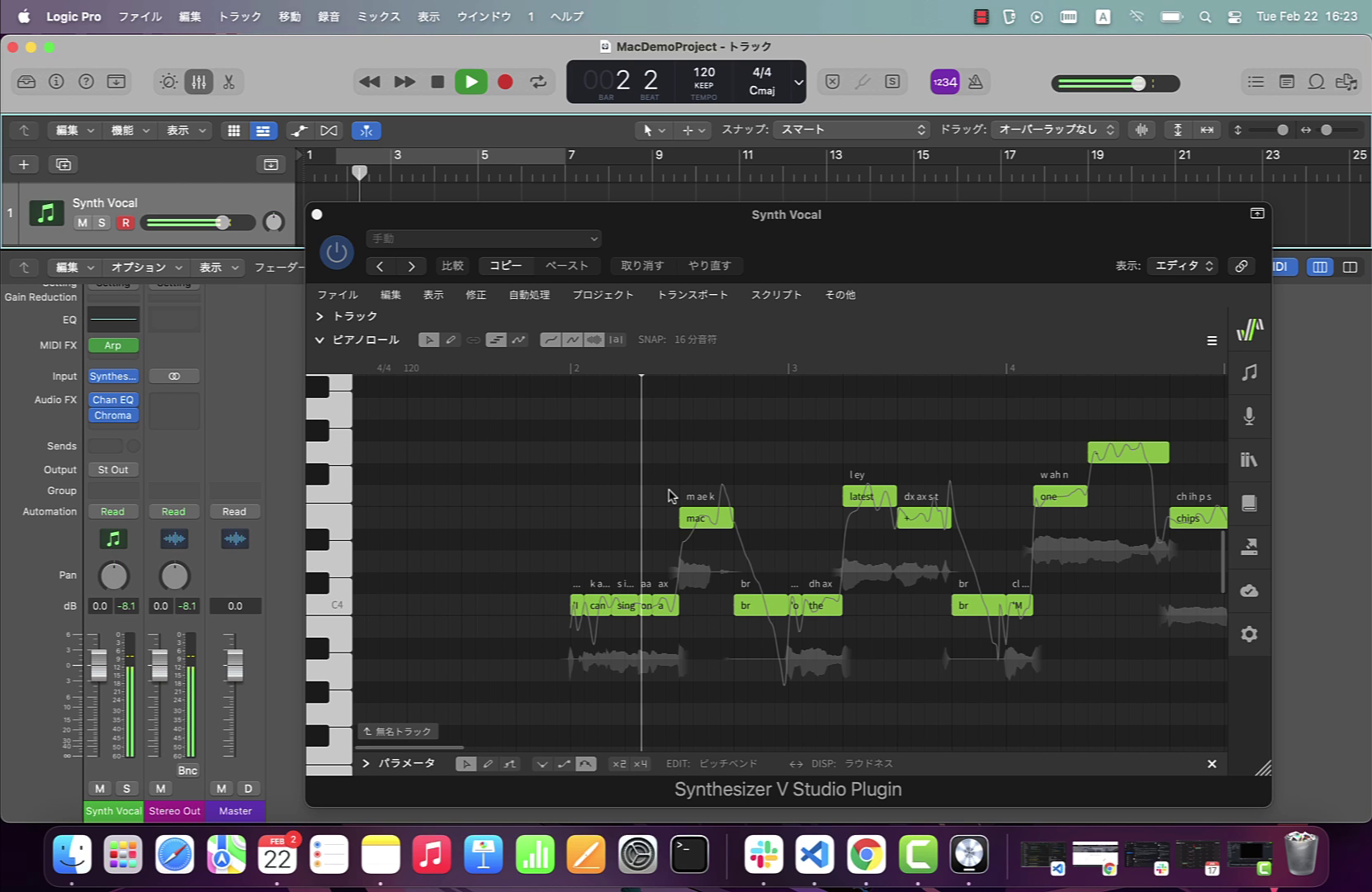

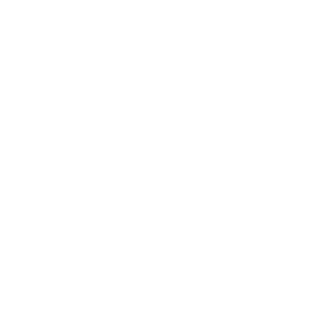

I wanted to hear Hayden 2, from Dreamtonics’ Synthesizer V voice database collection, singing the low harmony part. I rendered and bounced the excerpt that I composed with Hayden 2 in Synthesizer V. Then, I imported the audio into Vocoflex in Logic. This way, I could hear Hayden 2 sing with my own vocal delivery and accent.

As for the clone of the high harmony part, I went with a breathy and thin voice to round out the resonance of my own take.

Before I knew it, my three-part vocal harmony had become an eight-part stack worthy of a chorus section. All that was left to do was adjust the volume levels and panning knobs for a balanced and wide mix.
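For anyone who wants to plan a similar session on paper first, here is a rough sketch of the stack described above, written as a simple Python list. It is only a note-taking aid: the `Layer` class and its field names are hypothetical and have nothing to do with Vocoflex's own settings or any DAW API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    source: str  # which recorded take feeds this layer
    voice: str   # how the timbre of the layer was produced


stack = [
    Layer("lead", "lead take", "original voice"),
    Layer("double 1", "lead take", "Voice Generator, feminine and slightly breathy"),
    Layer("double 2", "lead take", "Voice Generator, feminine and slightly breathy"),
    Layer("octave below", "lead take", "Voice Generator, masculine, -12 semitones"),
    Layer("high harmony", "high harmony take", "original voice"),
    Layer("high harmony clone", "high harmony take", "breathy and thin voice"),
    Layer("low harmony", "low harmony take", "original voice"),
    Layer("low harmony clone", "low harmony take", "Hayden 2 target voice"),
]

# Count the distinct recordings versus the layers built from them.
recorded_takes = {layer.source for layer in stack}
print(len(recorded_takes), "takes ->", len(stack), "layers")
```

Listing the layers this way makes it easy to see that every extra voice comes from one of the three original recordings.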

Unlocking Creativity with AI in Vocal Production

This is just one way AI technology can help unlock creativity in your vocal productions.

Some producers build even larger virtual choirs with Vocoflex. Others transform the gender of male vocals to pitch their songs to female artists instead.

Instrumentalists switch between different brands and models all the time. Now, vocalists can too. Why not discover what’s beyond the limits of your own vocal cords? Blend your voice with a couple of other AI-generated voices to achieve an inimitable timbre. Who knows — it may even lead to new ideas about the way you perform your music.

And if you’re a sound designer too, take this concept one step further by automating the settings in Vocoflex over time. With additional audio effects like reverb and delay, you can implement otherworldly vocal textures in your tracks.

Since Vocoflex can process nearly any type of monophonic audio signal, it puts a revolutionary talk box–like effect at your fingertips. Here’s a clip of Jordan Rudess playing electric guitar live through Vocoflex’s Realtime mode.

Designed to morph singing voices, Vocoflex excels in musical versatility. As it continues to evolve with each update, so will your creativity.

Curious to find out more? Discover how you can transform your vocals with Vocoflex today.